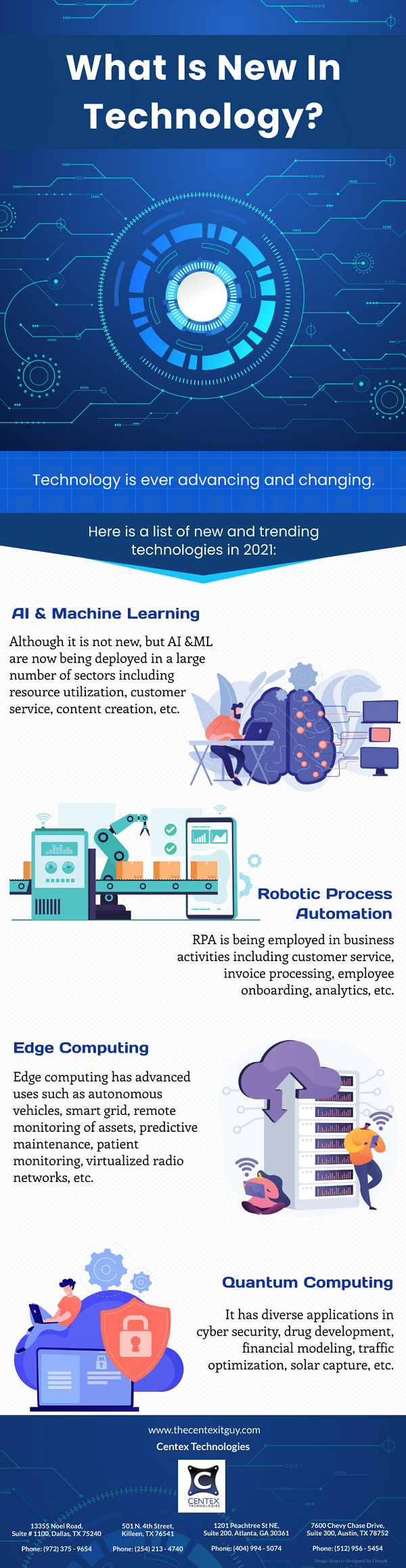

Edge computing is changing how data is processed, stored, and used. It decentralizes data processing by placing computation and storage closer to where they are needed, which reduces latency and improves efficiency. Instead of being sent to a centralized cloud server, data is processed on or near its source.

Operational Mechanism

Devices with built-in computing capability, such as IoT devices, gateways, or edge servers, process and analyze data locally. This shortens data transit time and conserves bandwidth, both of which are crucial for time-sensitive applications.
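
As a rough illustration of local processing, the sketch below shows how an edge gateway might reduce a window of raw sensor readings to a single compact summary before anything is sent upstream. The function name `summarize_window`, the `alert_threshold` parameter, and the sample temperature values are all hypothetical and chosen only for this example.

```python
from statistics import mean


def summarize_window(readings, alert_threshold):
    """Reduce a window of raw readings to one small summary record.

    Hypothetical helper: only this summary, not every raw reading,
    would be forwarded to the cloud.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # The alert decision is made locally, with no cloud round trip.
        "alert": max(readings) > alert_threshold,
    }


# Assumed sample data: five temperature readings collected at the edge.
window = [21.4, 21.6, 21.5, 29.8, 21.7]
summary = summarize_window(window, alert_threshold=28.0)
print(summary)
```

In this sketch five readings become one record, and the alert is raised on the device itself, which is the kind of saving in transit time and bandwidth described above.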

Benefits of Edge Computing

Edge computing is reshaping how data is managed and used. Some of its notable benefits are:

- Reduced Latency and Faster Processing: Processing data closer to its source significantly reduces latency, making it an ideal choice for real-time applications like autonomous vehicles and healthcare monitoring.

- Bandwidth Optimization: Minimizing data sent to the cloud optimizes bandwidth usage, reducing network congestion, particularly in scenarios dealing with extensive data streams.

- Enhanced Security and Privacy: Processing data at the edge reduces its exposure in transit, which can strengthen security and support privacy compliance.

- Scalability and Flexibility: Edge computing’s distributed nature facilitates easy scalability, adapting to fluctuating data volumes and supporting diverse applications.

- AI and Machine Learning Integration: Integrating AI and machine learning at the edge enables intelligent real-time decision-making.

- Tailored Industry Applications: The versatility of edge computing allows tailored solutions across various sectors, from manufacturing and healthcare to smart cities and retail.

- Immediate Edge Analytics: Edge analytics provides real-time analysis at the source, delivering immediate insights without sending data to a central location. This is beneficial for predictive maintenance and critical infrastructure monitoring.

- Resilience in Connectivity-Limited Environments: Edge devices can keep operating when cloud connectivity is limited or intermittent, making edge computing suitable for remote or off-grid locations and the IoT devices deployed there.

- Cost Efficiency: By reducing data transmission to the cloud, edge computing potentially decreases associated cloud service costs.

- Improved User Experience: Edge computing-powered applications enhance user experiences, especially in online gaming and video streaming, ensuring smoother and more responsive interactions.

- Tailored Edge-Native Applications: Designing applications specifically for edge computing architecture optimizes performance for edge devices, enhancing efficiency.

- Innovation Enabler: Edge computing fosters the development of novel applications and services, supporting innovation in remote healthcare diagnostics, autonomous vehicles, and immersive experiences.
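
The resilience benefit listed above is often achieved with a store-and-forward pattern: the edge device queues outgoing messages while the uplink is down and delivers them once it returns. The following is a minimal sketch under stated assumptions, not a production design. The class `StoreAndForwardBuffer`, the `fake_send` callable, and the `link` flag are hypothetical names invented for this illustration.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Queue messages locally and deliver them when the uplink works."""

    def __init__(self, send, max_items=1000):
        # `send` is any callable that returns True on successful delivery.
        self._send = send
        # Bounded queue: when full, the oldest message is dropped first.
        self._queue = deque(maxlen=max_items)

    def submit(self, message):
        """Queue a message, then try to deliver everything pending."""
        self._queue.append(message)
        return self.flush()

    def flush(self):
        """Send queued messages in order; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self):
        return len(self._queue)


# Simulated uplink (an assumption for the demo, not a real network call).
link = {"up": False}
delivered = []


def fake_send(message):
    if link["up"]:
        delivered.append(message)
        return True
    return False


buffer = StoreAndForwardBuffer(fake_send, max_items=100)
buffer.submit("reading-1")   # uplink down: stays queued
buffer.submit("reading-2")   # uplink down: stays queued
link["up"] = True
buffer.submit("reading-3")   # uplink back: all three are delivered in order
print(delivered, buffer.pending())
```

The bounded queue is a deliberate trade-off in this sketch: a device with limited storage drops its oldest data instead of running out of memory during a long outage. A real deployment might persist the queue to disk.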

Challenges and Considerations

While edge computing boasts numerous advantages, it’s essential to address its challenges:

- Infrastructure Constraints: Establishing robust edge infrastructure demands significant investments in hardware, network resources, and maintenance.

- Standardization and Interoperability: Developing uniform standards and ensuring interoperability across various edge devices and platforms remains challenging.

- Data Management and Governance: Decentralized data processing raises concerns about governance, integrity, and compliance with regulatory frameworks.

- Security Vulnerabilities: Distributing computing power across multiple nodes increases the attack surface, necessitating robust security measures.

Centex Technologies provides advanced IT systems for enterprises. To learn more, contact Centex Technologies at Killeen (254) 213-4740, Dallas (972) 375-9654, Atlanta (404) 994-5074, or Austin (512) 956-5454.